Top Things To Learn About Traffic Bots

The internet is swamped with bots that can perform a wide range of tasks. These range from simple jobs, such as placing an order for groceries, to more complex ones, such as answering customer queries online.

This technology has undoubtedly changed the way people work. For many businesses with an online presence, automation has brought clear benefits. Not only can they work faster, but they can also cut the cost of human assistants and make more profit.

Amid all this buzz, one type that comes up often is the traffic bot. According to SmartHub, this type of bot can behave like a human, which makes it a major worry for many websites.

This article will provide a detailed explanation of how traffic bots impact websites and what can be done about it.

Are Bots Bad?

At first glance, it may seem that there is nothing wrong with bots. Automating everyday work has improved many things in both personal and professional life. However, bots do pose a problem for website owners in many cases.

One of the main reasons this technology is effective is that bots are designed to mimic human behavior. That means you can program a bot to perform a particular task, and it will usually do so faster and more efficiently than a person. This appeals to websites that want more traffic to grow their business.

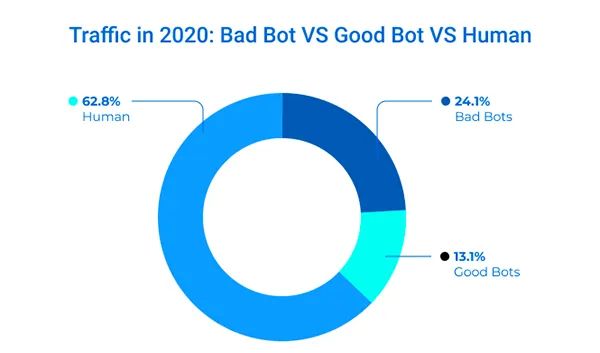

Genuine organic traffic can be converted into customers and, therefore, lead to increased profits. Bot traffic, by contrast, comes from non-human users. It has become very common, with nearly 30.2% of traffic generated through automation.

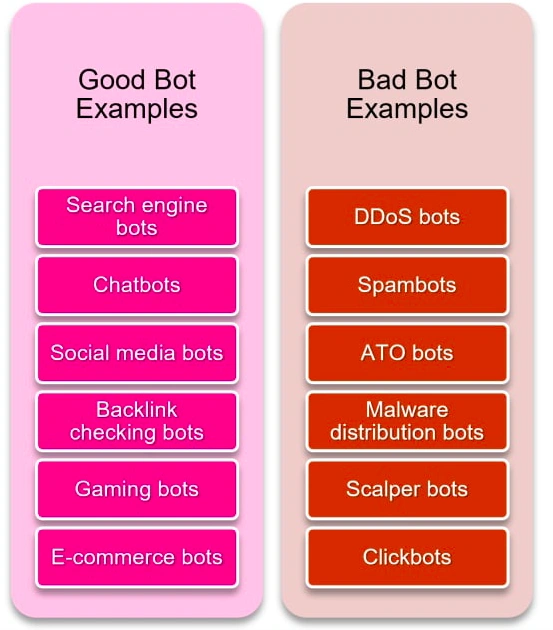

This share has risen steeply over the past 5 years. Is this harmful for your website? It is important to understand that there are good and bad bots.

FUN FACT

Bots are scripts written by programmers. Some chatbots are scripted in AIML (Artificial Intelligence Markup Language), an XML-based language for defining conversational rules.

So What Exactly Does It Mean When A Traffic Bot Is Good?

It all starts with the purpose the bot was created for. What the designers intended the bot to do makes the difference.

For instance, good bots are programmed to do work such as:

- Acting as personal assistants

- Providing digital services

Good bots won’t cause any harm to a website but provide the business with the necessary leverage. The simplest example of good traffic bots is web crawlers that make it easier for search engines to provide the information anyone is looking for.

The harmful ones, on the other hand, will disrupt a website because they are created with malicious intent. For example, spam bots send emails that can harm a computer and steal personal data.

There are many bad bots that you should be on the lookout for. These include:

- Ticket bots

- Email scrapers

- Comment bots

- Scrapers

How To Stop Bots

The internet is crowded with bad bots built to steal and misuse personal and professional data. They can mislead you by producing a sudden increase in what looks like organic traffic. Some carry malware that can compromise your data and put your customers' data at risk.

This malicious traffic can degrade web performance and increase security risks. Both will deter people from visiting the site.

So what can you do about this? Luckily there are several ways of stopping them.

Early Detection

The easiest approach is to detect bots before they reach a website, which saves a lot of time and hassle.

Early detection also reduces the strain on a server, because bot traffic takes up storage unnecessarily. Remember that the more load a server carries, the more energy it requires.

One of the most effective ways of doing this is by blocking certain IP addresses, which means the traffic on a website has to be monitored regularly. Once you notice an IP address behaving unusually, for example by sending far more requests than a normal visitor would, block it immediately. This strategy is effective, although it can be time-consuming.
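As a rough illustration of spotting unusual IP addresses, the sketch below counts requests per IP in an access log and flags the heavy hitters. It assumes each log line starts with the client IP (as in the common Apache/Nginx log layout); the function name `flag_heavy_ips`, the threshold, and the sample addresses are hypothetical and would need tuning for a real site.

```python
from collections import Counter

def flag_heavy_ips(log_lines, max_requests=100):
    """Return the IPs that appear more than max_requests times.

    Assumption: the client IP is the first whitespace-separated
    field of every log line.
    """
    counts = Counter()
    for line in log_lines:
        parts = line.split()
        if parts:  # skip blank lines
            counts[parts[0]] += 1
    return sorted(ip for ip, n in counts.items() if n > max_requests)

# Made-up sample log using documentation-only IP ranges.
sample = (
    ['203.0.113.7 - - [01/Jan/2024:00:00:01 +0000] "GET / HTTP/1.1" 200 512'] * 5
    + ['198.51.100.2 - - [01/Jan/2024:00:00:02 +0000] "GET /about HTTP/1.1" 200 256'] * 2
)

print(flag_heavy_ips(sample, max_requests=3))
```

A flagged address is only a candidate for blocking. A shared office network or a legitimate crawler can also produce many requests, so it is worth reviewing the list before acting on it.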

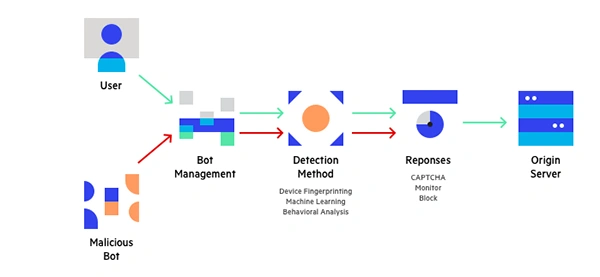

If you do not have the time to do this manually, use a blocking tool. Several bot management tools help by screening traffic and identifying which bots are bad. Using AI, these solutions automatically analyze and eliminate malicious bots coming to a website.

Use Security Plugins

Prevent the infiltration of bots on a website by installing security plugins. These tools are designed to enhance security on websites that are created with WordPress. Most plugins can not only monitor but also stop bots that may harm the website. The more advanced types can block hackers automatically.

Some will only show the source of the harmful bot traffic, leaving you to make the final decision. Bot management tools have several advantages. They use technology that monitors visitor behavior and request rates, and detects anomalies on servers.

In this way, it becomes easier to pick out which sources or visitors are suspicious. The benefits include:

- Early recognition of malware

- Eliminating bad bots while maintaining good ones

- Reducing the risk of false alarms on bad bots

- Reducing costs associated with more energy consumption from servers

- Providing regular updates on traffic
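To make the idea of monitoring request rates more concrete, here is a minimal sliding-window sketch. It is not how any particular plugin or bot management tool works; the class name `RateMonitor`, the window length, and the limit are assumptions chosen for illustration, and timestamps are assumed to arrive in non-decreasing order.

```python
from collections import defaultdict, deque

class RateMonitor:
    """Flag clients that exceed max_requests within window_seconds."""

    def __init__(self, window_seconds=60.0, max_requests=120):
        self.window_seconds = window_seconds
        self.max_requests = max_requests
        self._hits = defaultdict(deque)  # client -> recent timestamps

    def record(self, client, timestamp):
        """Record one request; return True if the client is over the limit."""
        hits = self._hits[client]
        hits.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while hits and timestamp - hits[0] > self.window_seconds:
            hits.popleft()
        return len(hits) > self.max_requests

monitor = RateMonitor(window_seconds=10, max_requests=3)
for t in [0, 1, 2, 3]:
    suspicious = monitor.record("203.0.113.7", t)
    print(t, suspicious)
```

With these settings the fourth request inside the 10-second window is the first one reported as suspicious. A real tool would combine a signal like this with others, such as behavior patterns, before treating a visitor as a bad bot.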

Take a look at the graph below. It shows that even though people use automation for their own benefit, bad bots make up a higher percentage of traffic than good ones.

Limit the Crawl Rate

Not all traffic bots are harmful, but even harmless ones can interfere with a website and slow it to the point of being unusable. You can decide to keep the good ones to work as crawlers.

Before you do this, consider whether the site actually needs web crawling in order to improve. Also, consider how crawling will affect your servers and expenses.

If crawlers are not required, get rid of good traffic bots that may be making the website lag. Limiting the crawl rate is an alternative that keeps crawlers while reducing their load. It limits how frequently a crawler visits the site. Once you set a limit, monitor how effective it is.

Start with a specific delay, for example, 20 seconds. If this noticeably slows down how quickly your pages are crawled, adjust it to avoid any negative effects.
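One common way to ask for such a delay is the `Crawl-delay` directive in a site's `robots.txt` file. This is a non-standard directive that some crawlers honor and others, including Googlebot, ignore, so treat the sketch below as one option rather than a guarantee. It uses Python's standard `urllib.robotparser` to check how a well-behaved crawler would read the file; the `ExampleBot` name and the `/admin/` path are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt asking every crawler to wait 20 seconds
# between requests and to stay out of /admin/.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 20
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# What a crawler that respects robots.txt would conclude.
print(parser.crawl_delay("ExampleBot"))
print(parser.can_fetch("ExampleBot", "/admin/login"))
print(parser.can_fetch("ExampleBot", "/blog/post"))
```

Because compliance is voluntary, this only affects bots that choose to follow the rules. Bad bots typically ignore `robots.txt`, which is why the blocking measures above are still needed.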

Final Thoughts

Automation has spread so widely that telling good bots from bad ones has become necessary. The only way to get genuine traffic is by keeping the harmful traffic bots out. This can be done with security plugins, which also reduce the risks.

The best way to keep bots away is through continuous monitoring and analysis. Many tools are available that will not only detect but also eliminate bot traffic automatically. Others will simply detect the traffic created by bad bots and show where it comes from, leaving you to make the final decision on what to do with the bots.